Enforcing dynamic security policies when running a distributed coding and data science process

All the technologies and application scenarios discussed here are supported by the strong.network platform for enterprises. Contact us at hello@strong.network for more information. In this article, I explain some of the implementation choices that we took to implement zero-trust principles that

A zero-trust architecture implements security principles that protect data throughout the business operations of a global company. In combination, an information security standard such as ISO 27001 is a key source for choosing security governance policies, whose targets are shaped by the reach and the aim of the business process. I discuss these security principles here and how to tackle the challenges of implementing them.

Process Globalization at Scale

Over the last 10 years, the forces of globalization have continually reshaped the business processes of corporations across all types of operations. In the case of development, companies have established centers of excellence, remote outposts and offshore teams to optimize budget allocation across their products and services. These companies have also increased technical collaborations with their peers and smaller partners, often to alleviate a skills shortage and boost their capability to innovate.

What is fairly new is that this modus operandi is now being replicated by smaller companies, i.e. Small and Medium Enterprises (SMEs), thanks to the rise of freelancer platforms such as Upwork and Toptal, or simply through workforce outsourcing companies. In sum, the upfront cost of unbundling a product development process across a global team has become low enough that SMEs can now benefit from the new global data flows (see McKinsey’s Digital globalization: The new era of global flows). Process globalization can now be done at scale, i.e. across all company sizes. In many of today’s global development processes, such as the ones mentioned above, computer source code and supporting data are the main IP assets consumed and generated.

Sometimes, the central asset circulated across teams and locations is purely data and the output is an analysis often taking the form of a trained machine learning model for Artificial Intelligence (AI) applications.

The ability to execute such a transaction epitomizes the capability brought by innovation tournament platforms for AI. It consists of pairing a company that holds an important dataset with the Crowd, i.e. a seemingly unorganized talent pool willing to solve a technical challenge over a short period of time, let’s say two weeks, in exchange for a payday often north of USD 10'000. Platforms such as Kaggle are among the brokers enabling such ephemeral collaborations.

In all the scenarios presented so far, a nagging challenge for companies of any size, and often a significant cost for companies with outsourcing experience, is securing the business processes that support them.

The security challenge at stake here is mainly the control and protection of company IP assets such as source code and data throughout the process. This is ideally done by implementing an IT infrastructure, such as secure Cloud Development Environments (secure CDEs), that securely enables remote work locations, accommodates temporary workers cost-efficiently, and prevents data leaks during ephemeral collaborations, whether between companies or during innovation tournaments. Before we move on to the solution, note that I also discuss this security challenge in another article, “The Place of Information Security in the Age of Accelerations”.

The Demise of the IT Perimeter

The classical way for companies to protect IP assets such as source code and data is to store them on servers in the Cloud or behind a bulwark referred to as the corporate IT perimeter. This way, machines within their premises are trusted to access these assets, whereas the ones outside are not.

The IT perimeter is typically delimited by network elements such as firewalls and routers. Hence, once a host is behind these elements, its authentication and authorization requirements are lower than when it is outside.

The changes in business process reach explained previously are forcing companies to provide data access to hosts both inside and outside the IT perimeter. Virtual Private Network (VPN) connections are commonly used to give access to external hosts. However, a fundamental issue is the trust granted to hosts inside the network, whether connected physically or through a VPN. As a result, a single compromised host can expose an entire network through a type of attack known as lateral movement. Furthermore, the increased use of cloud resources blurs the distinction between inside and outside hosts.

In contrast, a zero-trust architecture is built on the premise that no host is granted any default amount of trust and that all hosts undergo thorough verification. At its core, it removes the distinction between a host being inside or outside the network. Furthermore, it assumes that the network is compromised and that insider threats are active. Such an architecture is based on a set of cyber security design principles implementing a strategy that focuses on protecting resources, i.e. the company’s IP assets, as opposed to the network perimeter. This focus is embraced by best practices and guidelines such as the ones described in ISO 27001 and other information security standards in the ISO 27k series. These guidelines span from management practices to security policies for core business process entities such as users, resources and applications. In short, the zero-trust approach prescribes design principles for an IT infrastructure that focuses on resource protection by narrowing the scope of access control (referred to as segmentation) and enabling continuous security assessment. In effect, the key benefit of a zero-trust approach is the ability for a company to implement granular and dynamic security policies acting on a set of entities such as users, resources and applications.
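To make the contrast with the perimeter model concrete, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `Request` fields, the `10.0.0.0/8` corporate range and the two function names do not come from any product or standard.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

# Hypothetical corporate address range, used only for illustration.
CORPORATE_NET = ip_network("10.0.0.0/8")


@dataclass(frozen=True)
class Request:
    source_ip: str
    user_authenticated: bool  # e.g. outcome of an identity provider check
    device_compliant: bool    # e.g. outcome of an endpoint security check
    user_authorized: bool     # e.g. outcome of an access control lookup


def perimeter_allows(req: Request) -> bool:
    """Perimeter model: being located inside the network implies trust."""
    return ip_address(req.source_ip) in CORPORATE_NET


def zero_trust_allows(req: Request) -> bool:
    """Zero-trust model: location grants nothing, every check must pass."""
    return (req.user_authenticated
            and req.device_compliant
            and req.user_authorized)


# A compromised host inside the perimeter (hypothetical values).
compromised = Request("10.1.2.3", user_authenticated=True,
                      device_compliant=False, user_authorized=True)
```

With these definitions, `perimeter_allows(compromised)` accepts the request on location alone, whereas `zero_trust_allows(compromised)` rejects it because the device check fails.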

How to Design a Process Architecture using Zero-Trust Principles

The first part of designing a zero-trust architecture is to identify the set of entities that play a role in accessing resources. In that respect, it is best to start from the business needs, and in some cases even from the nature of the business process to support. A typical scenario is a set of users who get access to resources through the use of applications.

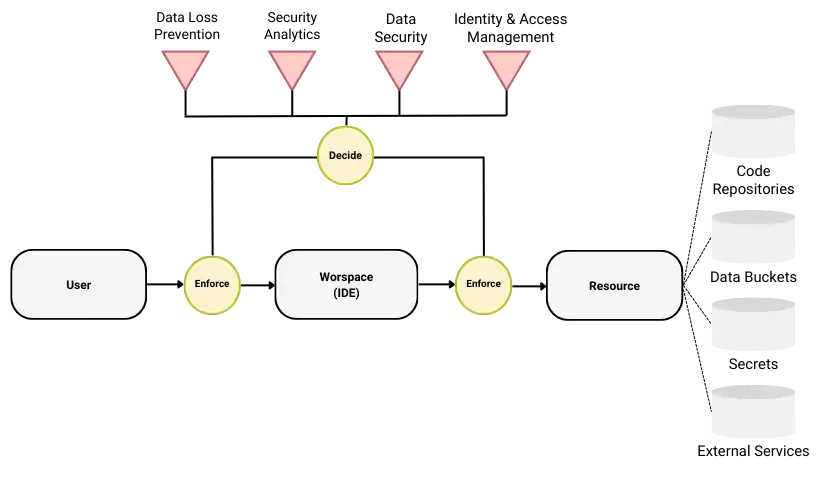

For example, in the case of a development process, users access workspaces (typically an Integrated Development Environment, or IDE) with access to resources such as code repositories, data buckets and external services to do their job, i.e. develop code and practice data science. Any of these resources can be deployed on premise or in the Cloud.
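The entities of this development scenario can be written down as a small data model. The sketch below is only one possible representation: the class names, the `Sensitivity` levels and the attribute names are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Sensitivity(Enum):
    # Hypothetical classification levels.
    PUBLIC = "public"
    CONFIDENTIAL = "confidential"
    REGULATED = "regulated"


@dataclass(frozen=True)
class Resource:
    name: str
    kind: str      # e.g. "code-repository", "data-bucket", "external-service"
    location: str  # "on-premise" or "cloud"
    sensitivity: Sensitivity


@dataclass(frozen=True)
class User:
    name: str


@dataclass
class Workspace:
    """The application (e.g. an IDE) through which a user reaches resources."""
    owner: User
    resources: List[Resource] = field(default_factory=list)

    def sensitive_resources(self) -> List[Resource]:
        """Resources that security policies will need to cover."""
        return [r for r in self.resources
                if r.sensitivity is not Sensitivity.PUBLIC]
```

For instance, a workspace attached to a public documentation repository and a regulated data bucket would report only the data bucket from `sensitive_resources()`.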

The next step is to understand what type of security the resources require and to express it as policies acting on the entities identified in the previous step. Here again, if preserving information security during operations is the goal, a standard such as ISO 27001 is a good source of inspiration. Depending on the industry, specific constraints attached to regulations are also considered.

Because policies are based on attributes that are continuously assessed during operations, the last piece of this mechanism is the identification of a set of security functions to evaluate these attributes. These are typical security functions such as Identity and Access Management (IAM), Data Security, Endpoint Security, Security Analytics and possibly others depending on the nature of the process. Back to the case of the development process mentioned above, an example of policy could be:

Every confidential or regulated resource shall be accessed from a workspace whose networking and clipboard functions are monitored. If the user is mobile, networking and clipboard functions are blocked.

In addition to protecting sensitive company data, the above statement also covers a series of requirements from ISO 27001, namely A.12.4.1 Event Logging and A.6.2.1 Mobile Device Policy. The functions used to assess the status of this policy are the IAM mechanism (to authenticate the user and verify that she has access to the resource), Security Analytics (to determine when the user is mobile), and a Data Loss Prevention (DLP) mechanism as the endpoint security function.

Finally, the last piece necessary to the architecture is a means to define and enforce security policies during operations. The NIST zero-trust architecture specification (SP 800-207) defines Policy Decision Points and Policy Enforcement Points that connect security functions to the process entities. The architecture model attached to the above example is represented in the next figure. Yellow circles represent policies and red triangles represent security functions. The data access flow is represented by the arrows.
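The example policy can be sketched as a decision function and an enforcement function. This is a simplified illustration and not the NIST reference design: the names `AccessContext`, `Decision`, `decide` and `enforce` are hypothetical, and leaving non-sensitive resources unrestricted is an assumption, since the policy statement does not cover them.

```python
from dataclasses import dataclass
from enum import Enum


class Control(Enum):
    UNRESTRICTED = "unrestricted"
    MONITORED = "monitored"
    BLOCKED = "blocked"


@dataclass(frozen=True)
class AccessContext:
    user_has_access: bool     # assessed by the IAM function
    user_is_mobile: bool      # assessed by the Security Analytics function
    resource_sensitive: bool  # resource is confidential or regulated


@dataclass(frozen=True)
class Decision:
    allow: bool
    networking: Control
    clipboard: Control


def decide(ctx: AccessContext) -> Decision:
    """Decision point: map the assessed attributes to a decision."""
    if not ctx.user_has_access:
        return Decision(False, Control.BLOCKED, Control.BLOCKED)
    if not ctx.resource_sensitive:
        # Assumption: the policy is silent on non-sensitive resources.
        return Decision(True, Control.UNRESTRICTED, Control.UNRESTRICTED)
    level = Control.BLOCKED if ctx.user_is_mobile else Control.MONITORED
    return Decision(True, level, level)


def enforce(decision: Decision) -> dict:
    """Enforcement point: turn a decision into workspace DLP settings."""
    if not decision.allow:
        return {"access": "denied"}
    return {
        "access": "granted",
        "networking": decision.networking.value,
        "clipboard": decision.clipboard.value,
    }
```

Because `decide` only reads attributes, it can be called again whenever one of them changes, for example when the user moves to a different network, which is what makes the policy dynamic.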

Once component definitions are in place, design principles are applied using a stepwise, iterative methodology. The first step is often to locate all predefined entities, i.e. users, resources and applications, across the company (or in the Cloud) to which policies should apply.

Hence practitioners will start with the identification of all data sources that require protection. Then, the same task is applied to applications that are used to access data. Generally all users are considered in this process.

I gave a few examples of security functions in the previous text. The choice for these functions depends on the nature of the process and the security goals. Most importantly, these functions are used to assess the value of some of the attributes attached to entities and used to define security policies.

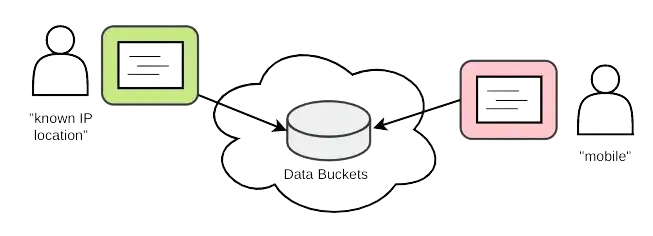

In the policy example given previously, one of the user’s characteristics is whether she is mobile or not. The assessment is likely based on the recognition of her current IP address. Based on this result and the confidentiality attribute of the data source, the DLP function will be configured to abide by the policy. This example fits in the scope of a secure development process, where users can be located anywhere yet source code and data have to be protected at all times, as illustrated in the figure below.
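An IP-based mobility assessment of this kind can be sketched with Python's standard `ipaddress` module. The office ranges below are documentation addresses picked for illustration, and the `is_mobile` and `dlp_mode` helpers, as well as the `"off"` mode for non-confidential data, are assumptions rather than a description of an actual DLP product.

```python
from ipaddress import ip_address, ip_network

# Hypothetical office ranges (RFC 5737 documentation addresses).
OFFICE_NETWORKS = [
    ip_network("192.0.2.0/24"),
    ip_network("198.51.100.0/24"),
]


def is_mobile(current_ip: str) -> bool:
    """Treat the user as mobile when her IP is outside every known office range."""
    addr = ip_address(current_ip)
    return not any(addr in net for net in OFFICE_NETWORKS)


def dlp_mode(current_ip: str, resource_confidential: bool) -> str:
    """Pick the DLP setting from the user's location and the data's attribute."""
    if not resource_confidential:
        return "off"  # assumption: the policy only covers confidential data
    return "block" if is_mobile(current_ip) else "monitor"
```

In practice an IP address is a weak signal on its own, so a real assessment would likely combine it with other attributes such as device posture.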

Challenges When Implementing Zero-Trust Architectures

Companies are likely to encounter a few challenges when implementing zero-trust architectures. I discuss some of the typical ones below and how to tackle them. The third and last challenge in the list below, i.e. dealing with architecture scope, is definitely the one that deserves the most attention.

User Experience

At first, it is likely that running the zero-trust process will require users to perform more authentication and authorization activities than before. This effect can be mitigated by making these activities transparent through a single identity provider and a single sign-on mechanism. Open standards such as OAuth, OpenID Connect and SAML enable the implementation of a mostly transparent authentication and authorization mechanism. In addition, the automated management of private and public key pairs helps manage identities across connected tools, e.g. external services, code repositories and data bucket providers, on behalf of users.

Interoperability Considerations of Zero-Trust Products

The mitigation of interoperability issues is highly dependent on the vendor’s solution. It is important to verify that solutions are based on open standards. As I explained previously, a good start for assessing the goals of a zero-trust architecture is the business process that requires data security. The needs for interoperability stem from that business process as well.

Architecture Scope

An important design principle when building a zero-trust architecture is the definition of the scope. Finding the right scope for (access control) security comes through the application of microsegmentation. This is achieved in multiple ways.

For starters, microsegmentation is a general security principle that reduces the attack surface by limiting access to resources on networks. By narrowing the scope of the zero-trust architecture to a single (type of) process, for example the development process, resources that only contribute to this process can be isolated from non-participating users or applications. In turn, this confines data access flows to the process participants. This can be implemented by providing and managing credentials that are only valid in the scope of the process at stake. This is microsegmentation at the business process level.

Then, microsegmentation can be used to put a wrapper around the access control to resources. For example, practitioners might define that resources can only be accessed through the SSH protocol, with automatically managed cryptographic keys. This is microsegmentation at the application protocol level (using the terminology of the OSI model).

Finally, a connection to a repository is only allowed for whitelisted domains (or IP addresses), or even for a specific repository designated by name. This is microsegmentation at the network level, i.e. the whitelisting of specific network destinations such as domain names. The three steps of microsegmentation are represented in the figure below.
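The three levels can be combined into a single connection check. The sketch below is illustrative only: the process name, the allowed scheme, the host `git.example.com` and the `Credential` class are hypothetical values, not settings of a particular platform.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# All values below are hypothetical and chosen for illustration.
PROCESS_SCOPE = "development"
ALLOWED_SCHEMES = {"ssh"}
ALLOWED_HOSTS = {"git.example.com"}


@dataclass(frozen=True)
class Credential:
    subject: str
    scope: str  # business process in which the credential is valid


def connection_allowed(cred: Credential, url: str) -> bool:
    """Allow a connection only if it passes all three segmentation levels."""
    parsed = urlparse(url)
    in_process = cred.scope == PROCESS_SCOPE        # business process level
    protocol_ok = parsed.scheme in ALLOWED_SCHEMES  # application protocol level
    host_ok = parsed.hostname in ALLOWED_HOSTS      # network level
    return in_process and protocol_ok and host_ok
```

A development-scoped credential connecting to `ssh://git@git.example.com/team/repo.git` passes all three checks, while the same credential over HTTPS, or against any other host, is refused.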

In general, the goal of microsegmentation is to give practitioners the ability to set granular security policies that span multiple levels of abstraction, such as the ones exemplified above. This greatly reduces the attack surface and allows for easy and accurate auditing of the process operations.

Protecting Resources Using Zero-Trust Architectures

In conclusion, applying zero-trust architecture design principles enables the deployment of dynamic security policies that focus on protecting resources as opposed to surveilling the network. By breaking free from its IT perimeter, a company can radically improve its security posture in relation to its IP assets, in particular when deploying its business processes globally. It allows the company to support business scenarios such as the ones introduced at the beginning of this discussion, i.e. outsourcing of business activities, remote teams, collaboration settings, crowd-based innovation tournaments, etc.

Security policies are part of the company’s information security program and are likely derived from industry-specific regulations, but are also based on information security standards. The challenge of efficiently deploying a zero-trust architecture partly resides in the ability to focus on a manageable scope. Starting from the business process is likely the most sensible way to proceed. Then microsegmentation is applied such that the attack surface of entities within this context is reduced to a minimum. This, in turn, enables the definition of granular, continuously assessed security policies that capture the diverse conditions reflected in complex and global business scenarios.

All material in this text can be shared and cited with appropriate credits. For more information about our platform, please contact us at hello@strong.network